Community AI Chatbot

Building a fine-tuned LLM for a Discord community.

The Vision: A Smarter Community Assistant

Standard Discord bots are often limited to pre-programmed commands. My goal was to create something far more dynamic: a true AI companion for our server. I wanted a bot that could understand the context of conversations, remember past interactions, answer questions based on our community's specific knowledge, and even adopt a unique personality. This project was an exercise in practical Large Language Model (LLM) application, from data curation to real-time deployment.

Project Architecture

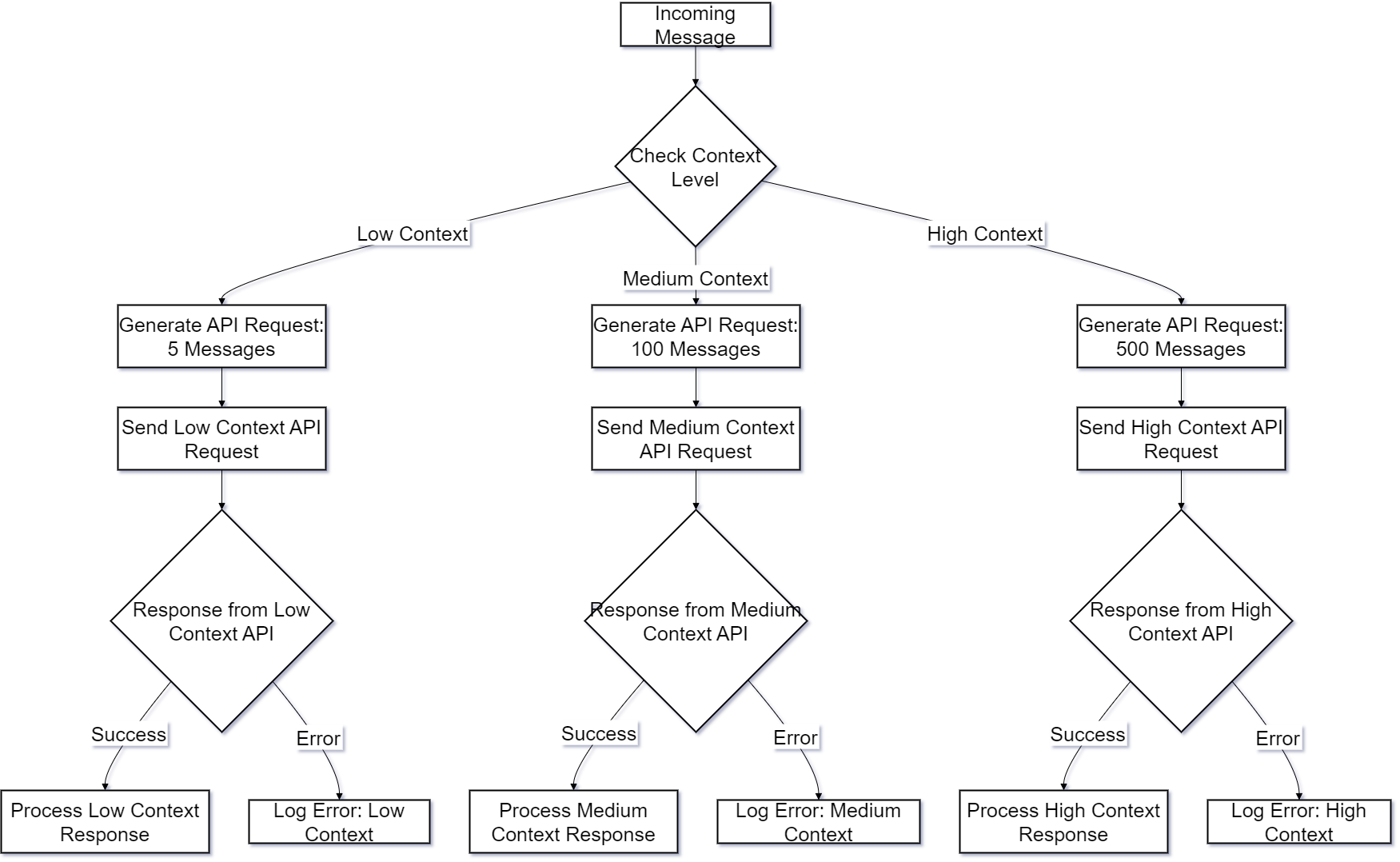

The system connects a powerful language model to the Discord platform through a lightweight Python backend. It's designed to be interactive and responsive, listening for mentions or specific commands to activate and join the conversation. The core challenge wasn't just connecting to an API, but ensuring the bot's responses were relevant, coherent, and genuinely useful to the community it served.

Technical Implementation

Data Curation and Fine-Tuning

To give the bot its own personality and knowledge base, I didn't use a generic, off-the-shelf model. Instead, I curated a dataset from our server's chat logs (with user privacy in mind). I cleaned this data and transformed it into an instruction-based format suitable for fine-tuning, then used it to fine-tune a base language model (such as Llama or a smaller distilled model), teaching it our community's slang, inside jokes, and common topics. This step was critical for moving beyond generic responses to contextual interactions.
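
As a rough illustration of the cleaning and formatting step, here is a minimal sketch. It assumes the logs have already been exported as a list of dictionaries with `author` and `content` keys (a hypothetical shape, not the project's actual export format), and it uses a simple heuristic: each message becomes the instruction and the next message from a different author becomes the response. Replacing raw user mentions with a placeholder is one possible privacy measure, not necessarily the one used here.

```python
import json
import re

# Discord stores user mentions in message text as <@123> or <@!123>.
MENTION_RE = re.compile(r"<@!?\d+>")


def scrub(text: str) -> str:
    """Replace raw user mentions with a neutral placeholder and trim whitespace."""
    return MENTION_RE.sub("@user", text).strip()


def to_instruction_pairs(messages):
    """Pair each message with the message that follows it from a different author.

    `messages` is assumed to be a chronologically ordered list of
    {"author": ..., "content": ...} dicts (hypothetical export format).
    """
    pairs = []
    for prev, curr in zip(messages, messages[1:]):
        if prev["author"] == curr["author"]:
            continue  # skip someone continuing their own thought
        prompt, reply = scrub(prev["content"]), scrub(curr["content"])
        if prompt and reply:
            pairs.append({"instruction": prompt, "response": reply})
    return pairs


def to_jsonl(pairs) -> str:
    """Serialize the pairs as one JSON object per line."""
    return "\n".join(json.dumps(p, ensure_ascii=False) for p in pairs)
```

A real pipeline would likely need more filtering (bot messages, links, very short replies), but the overall shape of turning raw chat into instruction/response records would be similar.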

Real-Time Interaction with discord.py

The bot's connection to the server is handled by a Python application using the `discord.py` library. This backend is responsible for:

- Listening for events, such as being mentioned (`@BotName`) in a channel.

- Fetching recent conversation history to provide context for the LLM.

- Constructing a carefully engineered prompt that includes the context and the new user query.

- Sending the prompt to the fine-tuned LLM, receiving the generated response, and posting it back to the Discord channel in real time.
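
The four responsibilities above can be sketched roughly as follows, assuming discord.py 2.x. The names `SYSTEM_PROMPT`, `build_prompt`, `create_client`, and the `generate_reply` coroutine are hypothetical placeholders for illustration; in particular, `generate_reply` stands in for whatever call reaches the fine-tuned model. The history limit of 10 messages is also an arbitrary choice.

```python
# Hypothetical system instruction; the real prompt wording is not shown in this write-up.
SYSTEM_PROMPT = "You are a friendly assistant for this Discord community."


def build_prompt(history, query, system=SYSTEM_PROMPT):
    """Assemble instructions, recent (author, text) history, and the new query."""
    lines = [system, "", "Recent conversation:"]
    lines += [f"{author}: {text}" for author, text in history]
    lines += ["", f"User: {query}", "Assistant:"]
    return "\n".join(lines)


def create_client(generate_reply):
    """Build a discord.py client that answers when the bot is mentioned.

    `generate_reply` is assumed to be an async function taking the prompt
    string and returning the model's reply as a string.
    """
    # Imported here so build_prompt can be used without discord.py installed.
    import discord

    intents = discord.Intents.default()
    intents.message_content = True  # needed to read the text of history messages
    client = discord.Client(intents=intents)

    @client.event
    async def on_message(message):
        # Ignore our own messages and anything that does not mention the bot.
        if message.author == client.user or client.user not in message.mentions:
            return
        # Fetch recent messages (newest first) and restore chronological order.
        recent = [m async for m in message.channel.history(limit=10, before=message)]
        recent.reverse()
        history = [(m.author.display_name, m.content) for m in recent]
        prompt = build_prompt(history, message.content)
        async with message.channel.typing():
            reply = await generate_reply(prompt)
        if reply:
            await message.channel.send(reply[:2000])  # Discord's message length limit

    return client
```

Running it would then be a matter of calling `create_client(my_generate_reply).run(token)` with a bot token. Keeping `build_prompt` as a plain function makes the prompt format easy to test and adjust without connecting to Discord.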

Key Challenges & Learnings

One of the biggest challenges was managing conversation context. LLMs are stateless between calls, so every request had to carry a concise yet sufficiently complete history of the recent chat to produce coherent replies. I implemented a rolling window over the conversation history to keep the input prompt short. Another lesson was the importance of prompt engineering: small changes to the instructions given to the model could dramatically alter the tone and quality of its responses. This project was a hands-on lesson in the full lifecycle of a modern AI product, from data-centric training to live deployment and interaction.
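
To make the rolling-window idea concrete, here is a minimal sketch. The class name `RollingHistory` and both limits are hypothetical, and the character budget is only a crude stand-in for a token budget; the actual implementation may differ.

```python
from collections import deque


class RollingHistory:
    """Keep only the most recent messages, bounded by count and by size."""

    def __init__(self, max_messages=20, max_chars=2000):
        # deque with maxlen silently drops the oldest entry when full.
        self._messages = deque(maxlen=max_messages)
        self.max_chars = max_chars

    def add(self, author, content):
        self._messages.append((author, content))

    def window(self):
        """Return the newest messages that fit the budget, oldest first."""
        selected = []
        used = 0
        # Walk backwards from the newest message so recent context wins.
        for author, content in reversed(self._messages):
            line = f"{author}: {content}"
            if used + len(line) > self.max_chars:
                break
            selected.append(line)
            used += len(line)
        selected.reverse()
        return selected
```

For example, with `max_messages=3` and `max_chars=20`, adding four short messages keeps only the last three in memory, and `window()` returns just the newest ones that fit within 20 characters. Walking from newest to oldest means that when the budget runs out, it is the oldest context that gets dropped.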